0. NFS Server for clients with an append-only option - the idea.

Recently, I came up with an idea to use NFS for remote clients, with the requirement that remote clients are only able to append to a file which I created on the NFS share, rather than having full +rw control.

Simply put, I did not want users, or even root, on the remote server to be able to remove or overwrite a file on the NFS share.

I was thinking about mount options, however NFS gives you only two: RO and RW.

Therefore, neither of the two matched my requirements.

chattr +a applied to a file did not work either, as this attribute does not carry over between two remote file systems via the NFS protocol.

Unfortunately, Linux FS permissions are only RWX and there is no other file permission flag I could use.

I started looking for some sort of ACL on the Linux file system but did not find much that could be applicable to my solution.

I asked people on IRC what they thought I could do to achieve this, and I found an answer - SELinux.

Obviously, no one had a working PoC I could copy, and nowhere in the Cloud could I find a simple answer.

No articles on the why, the how or the what for...

Typical... ! I thought.

Feeling stuck, I kicked ass and started to combine ideas and compile the stack of information I dug up about NFS with SELinux and SELinux itself.

Want to see the result ? Aye... ? Let's begin - you will be impressed with what I achieved!

1. Discovering SELinux.

The discovery I made around SELinux was simply huge.

Most of the links and materials on the web are either not self-explanatory, out of date or not of any good use!

However, you need to read them, or something like them, to get familiar with SELinux as this is going to be pretty much the main topic.

I came across the materials referenced below (and more and more):

https://access.redhat.com/documentation/en-US/RedHatEnterpriseLinux/6/html/Security-EnhancedLinux/index.html

https://www.redhat.com/en/files/resources/en-rhel-selinux-devlopers-120114.pdf

http://freecomputerbooks.com/books/TheSELinuxNotebook-4thEdition.pdf

http://serverfault.com/questions/532981/selinux-how-to-add-a-new-security-context

http://fedoraproject.org/wiki/PackagingDrafts/SELinux#Creatingnew_types

First of all, SELinux is not easy to start with.

It is not very intuitive to use at the beginning, however when you practice a lot you will find out it's actually not too hard.

It improves Linux OS security, and it is no accident that the NSA had its dirty hands on it.

I will explain later why I referenced some of those links above.

2. Why NFS with SELinux?

NFS because it is the simplest file share server to set up on Linux and it simply does the job.

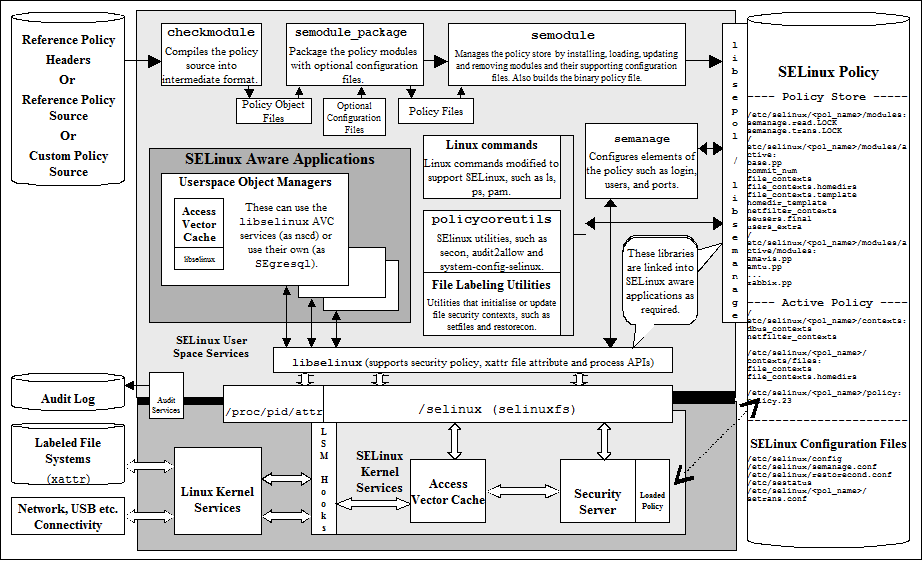

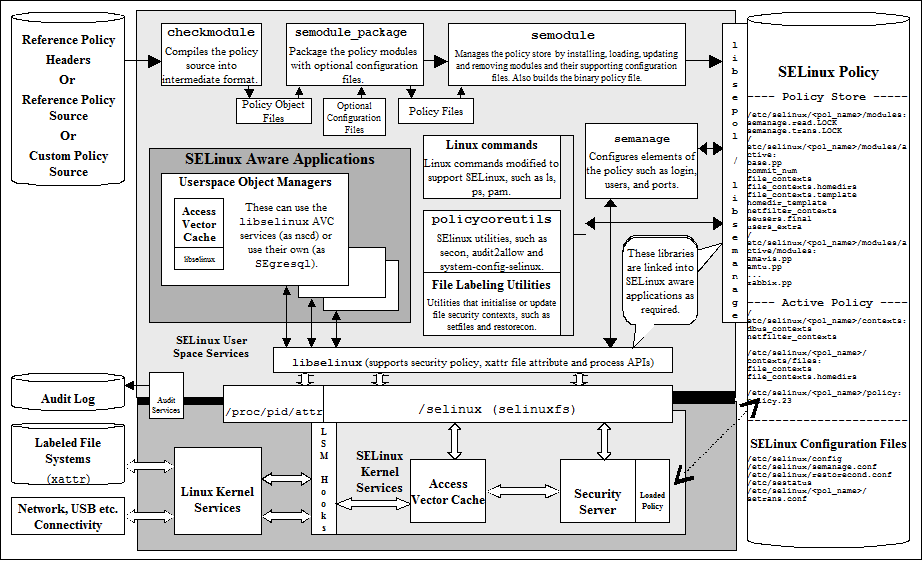

SELinux because it has the features and capabilities to limit access to system resources (FS, NET, PROCESSES etc.) by manipulating and modifying contexts and policies.

SELinux and its contextual design can be compared to group permissions that one process or user holds over another process or other system resources.

In plain language, Group (context) A is allowed to do certain things like "XYZ" on Group (context) B.

How I will use it in my concept I will explain later.

3. Setting up NFS with SELinux.

The steps to install and configure an NFS Server on CentOS 7 you will find out there without a doubt :)

The first thing is to set up the NFS Server and Client, enable SELinux on both and do a basic discovery.

Having an NFS Server and a Client running without any modification of the NFS Server configuration file leads to mixed contexts applied on the Server FS and Client FS, as shown below:

Server: nfsec-nfs-srv

[root@nfsec-nfs-srv ~]# ls -alZ /nfs3

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

dr-xr-xr-x. root root system_u:object_r:root_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:default_t:s0 test

Note: I created /nfs3 in the root tree simply to keep it out of directories like /var, which have their own context (var_t for example), and to use the DEFAULT one, called default_t, for a clearer picture in this post.

Client: nfsec-nfs-clt

[root@nfsec-nfs-clt ~]# mount -t nfs 192.168.100.213:/nfs3 /mnt/nfs/sysdump/

[root@nfsec-nfs-clt ~]# ls -alZ /mnt/nfs/sysdump/

drwxr-xr-x. root root system_u:object_r:nfs_t:s0 .

drwxr-xr-x. root root unconfined_u:object_r:mnt_t:s0 ..

-rw-r--r--. root root system_u:object_r:nfs_t:s0 test

Note: However, when I mounted it on the client I noticed that the client uses the native mnt_t context in the /mnt/ DIR as well as the native nfs_t context for every file within the NFS mounted DIR /mnt/nfs/sysdump, like my test file above.

Well, this was very intriguing: how could I use an SELinux context policy to allow a user to only append to the test file on the client side file system when a different context was applied to the same file on the server side file system?

This is not right, is it?
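To make the mismatch concrete, here is a minimal shell sketch that pulls the type field out of a context string and compares two of them. The helper names ctx_type and same_type are my own invention and not part of any SELinux tool; the two sample contexts are simply the ones from the listings above.

```shell
#!/bin/sh
# Hypothetical helpers (not shipped with any SELinux package).

# Print the type field (third colon-separated field) of an SELinux context.
ctx_type() {
    printf '%s\n' "$1" | cut -d: -f3
}

# Succeed only when both contexts carry the same type.
same_type() {
    [ "$(ctx_type "$1")" = "$(ctx_type "$2")" ]
}

server_ctx='unconfined_u:object_r:default_t:s0'   # /nfs3/test on the server
client_ctx='system_u:object_r:nfs_t:s0'           # same file seen on the client

if same_type "$server_ctx" "$client_ctx"; then
    echo "types match"
else
    echo "types differ: $(ctx_type "$server_ctx") vs $(ctx_type "$client_ctx")"
fi
```

On a live SELinux host the context of a file can be read with stat -c %C, so the two variables could be filled from real files instead of the pasted strings.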

I did more digging into the NFS daemon "working" contexts inside the OS and found that, after installing nfs-utils (yum install nfs-utils), the contexts are the same on the server side and on the client side!

Server: nfsec-nfs-srv

[root@nfsec-nfs-srv ~]# semanage fcontext -l |grep nfs

/var/lib/nfs(/.*)? all files system_u:object_r:var_lib_nfs_t:s0

/usr/lib/systemd/system/nfs.* regular file system_u:object_r:nfsd_unit_file_t:s0

/usr/sbin/rpc\.nfsd regular file system_u:object_r:nfsd_exec_t:s0

/etc/rc\.d/init\.d/nfs regular file system_u:object_r:nfsd_initrc_exec_t:s0

/usr/sbin/rpc\.mountd regular file system_u:object_r:nfsd_exec_t:s0

[root@nfsec-nfs-srv ~]# netstat -panltZ |grep nfs

tcp 0 0 0.0.0.0:20048 0.0.0.0:* LISTEN 2732/rpc.mountd system_u:system_r:nfsd_t:s0

tcp6 0 0 :::20048 :::* LISTEN 2732/rpc.mountd system_u:system_r:nfsd_t:s0

[root@nfsec-nfs-srv ~]# ps awuxZ |grep nfs

system_u:system_r:nfsd_t:s0 root 2732 0.0 0.0 42692 1812 ? Ss Jan13 0:00 /usr/sbin/rpc.mountd

system_u:system_r:kernel_t:s0 root 2737 0.0 0.0 0 0 ? S< Jan13 0:00 [nfsd4_callbacks]

system_u:system_r:kernel_t:s0 root 2741 0.0 0.0 0 0 ? S Jan13 0:00 [nfsd]

system_u:system_r:kernel_t:s0 root 2742 0.0 0.0 0 0 ? S Jan13 0:00 [nfsd]

Client: nfsec-nfs-clt

[root@nfsec-nfs-clt ~]# semanage fcontext -l |grep nfs

/var/lib/nfs(/.*)? all files system_u:object_r:var_lib_nfs_t:s0

/usr/lib/systemd/system/nfs.* regular file system_u:object_r:nfsd_unit_file_t:s0

/usr/sbin/rpc\.nfsd regular file system_u:object_r:nfsd_exec_t:s0

/etc/rc\.d/init\.d/nfs regular file system_u:object_r:nfsd_initrc_exec_t:s0

/usr/sbin/rpc\.mountd regular file system_u:object_r:nfsd_exec_t:s0

[root@nfsec-nfs-clt ~]# netstat -pantlZ |grep ":2049"

tcp 0 0 192.168.100.207:804 192.168.100.213:2049 ESTABLISHED - -

Note: There is no program or context listed on the client side - it's just - and -

[root@nfsec-nfs-clt ~]# ps awuxZ |grep nfs

system_u:system_r:kernel_t:s0 root 1010 0.0 0.0 0 0 ? S< Jan13 0:00 [nfsiod]

system_u:system_r:kernel_t:s0 root 5074 0.0 0.0 0 0 ? S 10:20 0:00 [nfsv4.0-svc]

In summary, excluding RPC, which does its job of binding procedures and mount points, we have at least 3 contexts of interest: nfs_t, nfsd_t and kernel_t.

This tells me that the NFS operations between the client and the server are made with the use of these 3 contexts, which is what I will be using later to achieve my PoC.

It also tells me that it would be great if the contexts of the NFS shared files matched between the server and the client!

In reality they don't by default, but it is possible to achieve this.

The outstanding task before I start is to find a way for the mounted FS context to match between the client and the server...
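If you want to repeat the process part of this discovery without eyeballing the ps output, a small filter can do it. This is only a sketch: nfs_process_types is a name I made up, and it assumes the SELinux context is the first column of each line, as in the ps listings above. It only covers the process side (nfsd_t, kernel_t); the nfs_t file label comes from ls -Z, not from ps.

```shell
#!/bin/sh
# Hypothetical helper: read "ps -eZ" style lines on stdin and print the
# distinct SELinux types of NFS-related processes.
nfs_process_types() {
    awk '/nfs|rpc\.mountd/ { split($1, ctx, ":"); print ctx[3] }' | sort -u
}

# Typical use on an SELinux-enabled host:
#   ps -eZ | nfs_process_types
```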

4. NFS SELinux context Traversal. (SELinux Labeled NFS Support)

RedHat / CentOS have built-in support for this type of requirement.

I found RH documentation explaining how to do it:

https://access.redhat.com/documentation/en-US/RedHatEnterpriseLinux/7/html/SELinuxUsersandAdministratorsGuide/sect-ManagingConfinedServices-NFS-ConfigurationExamples.html

This is basic and requires 2 steps.

1st - Edit /etc/sysconfig/nfs, add the flag -V 4.2 and restart the NFS Server.

Server: nfsec-nfs-srv

[root@nfsec-nfs-srv ~]# vi /etc/sysconfig/nfs

# Optional arguments passed to rpc.nfsd. See rpc.nfsd(8)

RPCNFSDARGS="-V 4.2"

2nd - Mount NFS Share on the NFS Client as follows:

Client: nfsec-nfs-clt

[root@nfsec-nfs-clt ~]# mount -o v4.2 192.168.100.213:/nfs3 /mnt/nfs/sysdump/

Now the context of the files on the source and destination will be the same.

The ".." parent directory context will still be different on each machine but we don't care about that.

Server: nfsec-nfs-srv

[root@nfsec-nfs-srv ~]# ls -alZ /nfs3

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

dr-xr-xr-x. root root system_u:object_r:root_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:default_t:s0 test

Client: nfsec-nfs-clt

[root@nfsec-nfs-clt ~]# mount -o v4.2 192.168.100.213:/nfs3 /mnt/nfs/sysdump/

[root@nfsec-nfs-clt ~]# ls -alZ /mnt/nfs/sysdump/

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

drwxr-xr-x. root root unconfined_u:object_r:mnt_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:default_t:s0 test
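Since the labels only travel with NFS 4.2, it is worth checking which version the client actually negotiated. The sketch below reads the vers= option from the mounts table; nfs_mount_vers is a hypothetical helper name of mine, and the optional second argument only exists so the function can be tried against a saved copy of the table.

```shell
#!/bin/sh
# Hypothetical helper: print the NFS version recorded for a mount point.
# $1 = mount point (no trailing slash), $2 = mounts table (default /proc/mounts)
nfs_mount_vers() {
    mnt=$1
    table=${2:-/proc/mounts}
    awk -v m="$mnt" '$2 == m && $3 ~ /^nfs/ {
        n = split($4, opt, ",")
        for (i = 1; i <= n; i++)
            if (opt[i] ~ /^vers=/) {
                sub(/^vers=/, "", opt[i])
                print opt[i]
            }
    }' "$table"
}

# Typical use on the client:
#   nfs_mount_vers /mnt/nfs/sysdump
# On the server, /proc/fs/nfsd/versions lists the enabled versions.
```

If this prints anything other than 4.2, I would expect the files to show up with nfs_t again instead of the server-side label.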

Now we can truly begin the PoC.

5. PoC - NFS Share with Append-Only option!

I am finally getting to the bottom of this, however I still have the feeling that this is just the beginning :)

You will not find anywhere else on the Net how to achieve this, so I will take that as good motivation to write about it.

Why Append-Only? Why is this so important and why is it so beneficial?

I will let you answer these questions for yourself. I certainly made good use of it, and this post is about how I achieved the working PoC.

Remember one thing - the NFS Server and the native Linux FS do not have the ability to create an ACL for an Append-Only file permission.

As I said before, we need to use SELinux to create, let's say, an Access Control List of what the NFS Server and Client can do with a file.

Imagine that the SELinux contexts are like groups. Group A can only do { read open append } on Group B.

Holla...?

So, what is our Group A and what is our Group B - what is this guy talking about?

Our Group A is NFS Server + Kernel + Client. ( SELinux: nfsd_t, kernel_t, nfs_t )

Our Group B is a new SELinux Type.

We will create a new SELinux type ("Group") which will allow Group A to do only certain operations on it.

The concept is to create a new SELinux Type called nfspol_t and let the context types from Group A (nfsd_t, kernel_t and nfs_t) perform only certain allowed operations on it, like { read open append } for example.

What you are going to see further below is SELinux PORN - do not watch it - or watch it at your own risk.

Yeah... this guy talks bollocks - How am I going to create a new SELinux Type?! :]

Here is where I should refer you to the references I made in point 1.

You will not find easy-to-read material on how to use SELinux anywhere.

Trust me, I tried to read through all of these docs, books and posts to find out how to create a new SELinux type and all I found was useless.

All my findings just gave me an idea about what I would need to do, but no raw code blocks ready to use - none of it worked.

I had to experiment on my own to get this bloody dilemma solved.

So again, what we need is a new SELinux Type to create an ACL-like policy between the files in the NFS DIR, the NFS Server and the Client.

6. NFS Server - Shared Directory new SELinux Type and Policy

I will not go through every troubleshooting step I made to get to the working PoC.

Below you will find me creating a new SELinux Type called nfspol_t; the policy TE (Type Enforcement) file contains all the allow rules required for the NFS Server side.

Bear in mind that NFS is a protocol linking two machines, a server and a client, therefore each of them needs its own SELinux module created and installed for this to work the way I want. The SELinux module for the client you will find under point 7.

Here is the NFS Server nfspol.te file and installation instructions:

################## NFS SERVER POLICY TE BEGIN ###################

module nfspol 1.0;

type nfspol_t;

require {

type nfs_t;

type nfsd_t;

type unconfined_t;

type kernel_t;

type fs_t;

class file { ioctl read getattr lock append open write execute relabelto relabelfrom setattr unlink rename };

class dir { search read getattr relabelto relabelfrom write rmdir open };

class filesystem associate;

}

allow nfspol_t self:file { ioctl read getattr lock append open };

allow nfspol_t nfs_t:file { ioctl read getattr lock append open };

allow nfspol_t nfsd_t:file { ioctl read getattr lock append open };

allow nfspol_t unconfined_t:dir { search getattr };

allow nfspol_t unconfined_t:file { read open };

#============= unconfined_t ==============

allow unconfined_t nfsd_t:file relabelto;

allow unconfined_t nfspol_t:dir { getattr search read relabelto open relabelfrom rmdir write };

allow unconfined_t nfspol_t:file { getattr open append relabelto write relabelfrom unlink rename read };

#============= nfspol_t ==============

allow nfspol_t fs_t:filesystem associate;

#============= kernel_t ==============

allow kernel_t nfspol_t:dir getattr;

allow kernel_t nfspol_t:file { getattr read open append lock write unlink rename };

################## NFS SERVER POLICY TE END ###################

yum install policycoreutils-python

yum install selinux-policy-devel

mkdir -p /usr/src/selinux-devel

cd /usr/src/selinux-devel/

ln -s /usr/share/selinux/devel/Makefile .

ls -l nfspol.te

checkmodule -M -m -o nfspol.mod nfspol.te

semodule_package -m nfspol.mod -o nfspol.pp

semodule -i nfspol.pp

Voilla! ;]
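Because the same three build commands are needed again on the client, they can be wrapped in a function. This is just a sketch: run and build_selinux_module are names I made up, and the DRY_RUN switch is my own addition so the commands can be previewed on a box without the SELinux tooling installed.

```shell
#!/bin/sh
# Hypothetical wrapper around the checkmodule / semodule_package / semodule
# steps. With DRY_RUN set to a non-empty value it only prints the commands.

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1 = module name; expects <name>.te in the current directory.
build_selinux_module() {
    name=$1
    run checkmodule -M -m -o "$name.mod" "$name.te" &&
    run semodule_package -m "$name.mod" -o "$name.pp" &&
    run semodule -i "$name.pp"
}

# Preview the commands without touching the system:
DRY_RUN=1 build_selinux_module nfspol
```

Without DRY_RUN the function stops at the first failing step, so a policy that does not compile never reaches semodule -i.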

semanage fcontext -a -t nfspol_t /nfs3/test

restorecon -v /nfs3/test

OR (quick, but not persistent across a file system relabel)

chcon -t nfspol_t /nfs3/test

[root@nfsec-nfs-srv ~]# ls -alZ /nfs3

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

dr-xr-xr-x. root root system_u:object_r:root_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:nfspol_t:s0 test

Hurraayyy! We have the nfspol_t type applied to a file inside the NFS Share DIR.

What do we have on the Client Side then?

[root@nfsec-nfs-clt ~]# ls -alZ /mnt/nfs/sysdump/

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

drwxr-xr-x. root root unconfined_u:object_r:mnt_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:unlabeled_t:s0 test

Oops... We have unlabeled_t assigned to the same file...

How? Shouldn't the NFS Server transfer the SELinux label to the Client?

It will, but to do so we need to install an SELinux module on the client as well - however with slightly different TE rules.

7. NFS Client - Mount Directory with new SELinux Type and Policy matching the Server-Side

Here is the NFS Client nfspol.te file and installation instructions:

################## NFS CLIENT POLICY TE BEGIN ###################

module nfspol 1.0;

type nfspol_t;

require {

type nfs_t;

type nfsd_t;

type unconfined_t;

type fs_t;

type kernel_t;

type initrc_t;

class file { ioctl read getattr lock append open relabelto setattr execute };

class dir { search getattr read relabelto };

class filesystem associate;

}

allow nfspol_t self:file { ioctl read getattr lock append open };

allow nfspol_t nfs_t:file { ioctl read getattr lock append open };

allow nfspol_t nfsd_t:file { ioctl read getattr lock append open };

allow nfspol_t unconfined_t:dir { search getattr };

allow nfspol_t unconfined_t:file { read open };

#============= unconfined_t ==============

allow unconfined_t nfsd_t:file relabelto;

allow unconfined_t nfspol_t:dir { relabelto read getattr search };

allow unconfined_t nfspol_t:file { read open append relabelto getattr };

#============= nfspol_t ==============

allow nfspol_t fs_t:filesystem associate;

#============= kernel_t ==============

allow kernel_t nfspol_t:dir getattr;

allow kernel_t nfspol_t:file { getattr read open append };

#============= initrc_t ==============

allow initrc_t nfspol_t:file { append open };

################## NFS CLIENT POLICY TE END ###################

yum install policycoreutils-python

yum install selinux-policy-devel

mkdir -p /usr/src/selinux-devel

cd /usr/src/selinux-devel/

ln -s /usr/share/selinux/devel/Makefile .

ls -l nfspol.te

checkmodule -M -m -o nfspol.mod nfspol.te

semodule_package -m nfspol.mod -o nfspol.pp

semodule -i nfspol.pp

Voilla! ;]
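Before comparing labels it does not hurt to confirm that the module really is loaded on both machines. A minimal sketch, assuming the module name is the first column of the semodule -l listing; module_listed is a hypothetical helper of mine.

```shell
#!/bin/sh
# Hypothetical helper: read "semodule -l" output on stdin and succeed
# when a module with the given name is listed.
module_listed() {
    awk -v name="$1" '$1 == name { found = 1 } END { exit !found }'
}

# Typical use on the server and on the client (needs root):
#   semodule -l | module_listed nfspol && echo "nfspol is loaded"
```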

Finally, now I have matching context on each side!

[root@nfsec-nfs-srv ~]# ls -alZ /nfs3

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

dr-xr-xr-x. root root system_u:object_r:root_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:nfspol_t:s0 test

[root@nfsec-nfs-clt ~]# ls -alZ /mnt/nfs/sysdump/

drwxr-xr-x. root root unconfined_u:object_r:default_t:s0 .

drwxr-xr-x. root root unconfined_u:object_r:mnt_t:s0 ..

-rw-r--r--. root root unconfined_u:object_r:nfspol_t:s0 test

8. Testing

[root@nfsec-nfs-srv ~]# cat /nfs3/test

1

2

[root@nfsec-nfs-clt ~]# cat /mnt/nfs/sysdump/test

cat: /mnt/nfs/sysdump/test: Permission denied

[root@nfsec-nfs-clt ~]# echo "3" >/mnt/nfs/sysdump/test

-bash: /mnt/nfs/sysdump/test: Permission denied

[root@nfsec-nfs-clt ~]# echo "3" >>/mnt/nfs/sysdump/test

[root@nfsec-nfs-clt ~]# #Looks like it works! Let's check what is on the server.

[root@nfsec-nfs-srv ~]# cat /nfs3/test

1

2

3 <<< HERE is the HOAX! ;] Magic
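The manual test above changes the file, so for repeated checks I would rather probe it without writing anything. The sketch below opens the file once for plain writing (dd with conv=notrunc, zero bytes copied) and once for appending (an empty >> redirection). probe_append_only is a made-up name, and the expectation that the client reports write=no append=yes is my assumption based on the test above, not something this snippet can prove on its own.

```shell
#!/bin/sh
# Hypothetical probe: report whether a file can be opened for plain write
# and for append, without changing its content.
probe_append_only() {
    f=$1
    [ -e "$f" ] || { echo "no such file: $f" >&2; return 2; }

    # Open for writing without truncation and copy zero bytes.
    if dd if=/dev/null of="$f" conv=notrunc 2>/dev/null; then
        can_write=yes
    else
        can_write=no
    fi

    # Open for append and write nothing; the subshell keeps a failed
    # redirection from terminating a strict POSIX shell.
    if ( : >> "$f" ) 2>/dev/null; then
        can_append=yes
    else
        can_append=no
    fi

    echo "write=$can_write append=$can_append"
}

# Typical use on the client:
#   probe_append_only /mnt/nfs/sysdump/test
```

On an ordinary local file both probes succeed, which makes the function easy to sanity-check before pointing it at the NFS mount.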

9. Summary

To get to this point I had to fight with modules and allow rules in the .te file.

I could not get echo "BLA" >>/mnt/nfs/sysdump/test (APPEND) or even echo "BLA" >/mnt/nfs/sysdump/test (OVERWRITE) to work at all.

It all started to work the way I wanted (APPEND-ONLY) only when I added the { write } permission for kernel_t and unconfined_t in the allow statements for the nfspol_t context on the server side.

What it did was allow the client to write via the NFS protocol to the local FS on the server, so that the client's local { append } became functional.

In other words, for the client to be able to append to a file on the NFS share it needs a module installed with { append } allowed from kernel_t and unconfined_t to nfspol_t; at the same time that IO needs to be able to write to the file on the server, which is why on the server side kernel_t and unconfined_t needed the { write } permission as well :>

Server:

[..]

allow unconfined_t nfspol_t:file { getattr open append relabelto write };

allow kernel_t nfspol_t:file { getattr read open append lock write unlink };

Client:

[..]

allow unconfined_t nfspol_t:file { read open append relabelto getattr };

allow kernel_t nfspol_t:file { getattr read open append };
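To see the server/client difference at a glance, the permission sets can be extracted from the allow rules and diffed. This is a rough sketch: perms_for is a hypothetical helper that only understands one-line allow rules like the ones in this post, and the two rules fed into it are the kernel_t lines quoted above.

```shell
#!/bin/sh
# Hypothetical helper: read .te text on stdin and print, one per line and
# sorted, the permissions granted by "allow <src> <tgt>:<class> ..." rules.
perms_for() {
    awk -v s="$1" -v t="$2:$3" '
        $1 == "allow" && $2 == s && $3 == t {
            gsub(/[{};]/, " ")          # drop braces and the semicolon
            for (i = 4; i <= NF; i++) print $i
        }' | sort
}

server_rule='allow kernel_t nfspol_t:file { getattr read open append lock write unlink };'
client_rule='allow kernel_t nfspol_t:file { getattr read open append };'

srv=$(mktemp)
clt=$(mktemp)
printf '%s\n' "$server_rule" | perms_for kernel_t nfspol_t file > "$srv"
printf '%s\n' "$client_rule" | perms_for kernel_t nfspol_t file > "$clt"

# Lines only in the server list: permissions the client module does not grant.
comm -23 "$srv" "$clt"
rm -f "$srv" "$clt"
```

With the two rules above, the extra server-side permissions come out as lock, unlink and write, with write being the one that made append work end to end. In practice the function would read the full files, e.g. perms_for kernel_t nfspol_t file < nfspol.te.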

Crazy stuff!

Another Working PoC achieved - Hope you enjoyed it - good bye :D

-- lo3k